DeepSeek-V3: Diagrammed

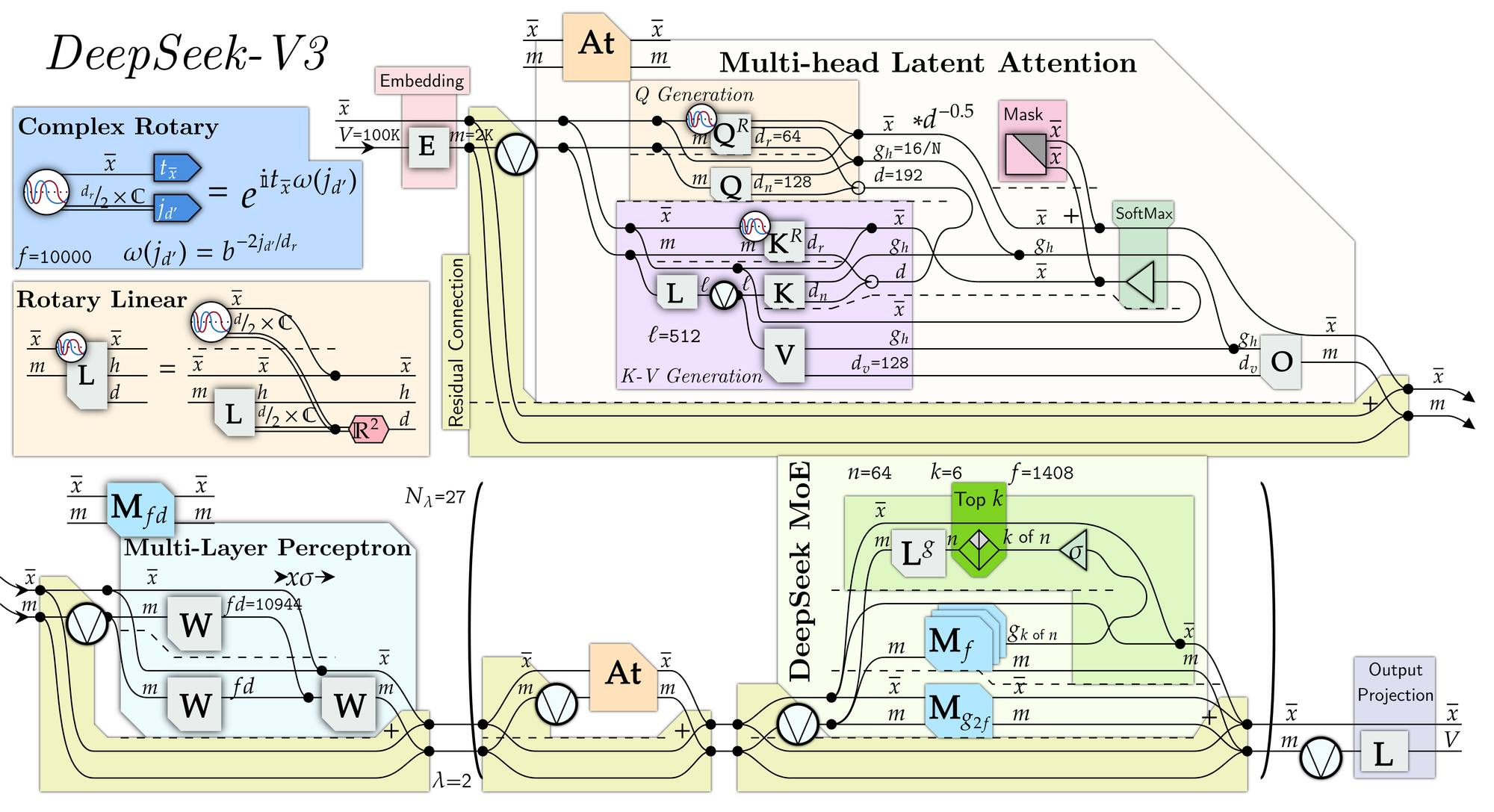

Quick post about a diagram I released on X a few weeks ago! It shows DeepSeek-V3, an open-source model. On the leaderboards, it's performing better than the May version of GPT-4o, suggesting that open-source models are less than 7 months behind OpenAI.

Here's the standard version (X link):

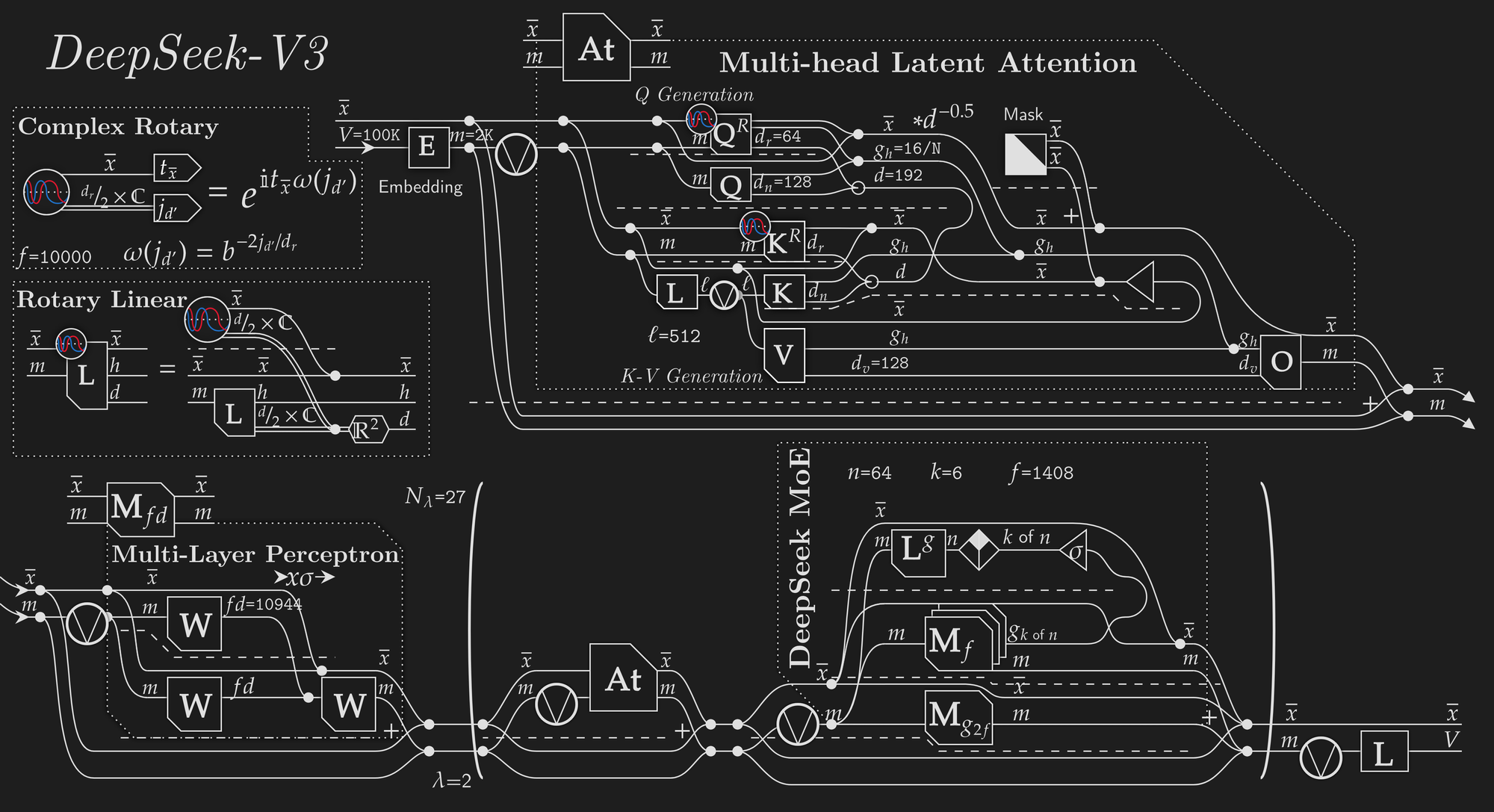

I've also made a dark mode edition because I think it looks nice (X link):

It's interesting to compare it to Mixtral-8x7B, the top open-source model from a year ago. DeepSeek-V3 is actually very similar, with two main differences. First, instead of grouped query attention, it uses DeepSeek's Multi-head Latent Attention, which applies a partial rotary embedding to the Q and K vectors and generates keys and queries through a LoRA-type low-dimensional projection. Second, it uses a wider but more targeted mixture of experts, with a normalized sigmoid gate instead of a normalized exponent (SoftMax).
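To make the gating difference concrete, here's a rough sketch of the two styles side by side. It's a toy in plain Python, not either model's actual routing code: the function names, the four example logits, and `k=2` are all made up for illustration, and it leaves out every other routing detail (load balancing, shared experts, and so on). The only thing it tries to show is "normalized exponent over the chosen experts" versus "sigmoid per expert, then renormalize over the chosen experts".

```python
import math


def softmax_topk_gate(logits, k):
    """Softmax-style gate: keep the k largest logits, then normalize their exponentials."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Subtract the max logit before exponentiating for numerical stability.
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def sigmoid_topk_gate(logits, k):
    """Normalized sigmoid gate: score each expert independently with a sigmoid,
    keep the k highest scores, then rescale them so they sum to 1."""
    scores = [1.0 / (1.0 + math.exp(-x)) for x in logits]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    total = sum(scores[i] for i in top)
    return {i: scores[i] / total for i in top}


# Hypothetical router logits for 4 experts (illustrative values only).
logits = [2.0, -1.0, 0.5, 3.0]
softmax_weights = softmax_topk_gate(logits, k=2)
sigmoid_weights = sigmoid_topk_gate(logits, k=2)

print("softmax gate:", softmax_weights)
print("sigmoid gate:", sigmoid_weights)
```

Both gates pick the same two experts here (the sigmoid is monotonic, so the ranking doesn't change), but the weights differ: roughly 0.73/0.27 for the softmax gate versus 0.52/0.48 for the sigmoid gate. In this toy, the sigmoid version spreads the weight more evenly, because each expert's score saturates on its own instead of competing through an exponential.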

If people are interested, I could probably make a poster/t-shirt design as well.